June 2003, Issue 91 Published by Linux Journal


Editor: Michael Orr

Technical Editor: Heather Stern

Senior Contributing Editor: Jim Dennis

Contributing Editors: Ben Okopnik, Dan Wilder, Don Marti

http://www.linuxgazette.com/

...making Linux just a little more fun!

From The Readers of Linux Gazette

compressed tape backups

Hi TAGs,

Quite a while back I remember a discussion on compressed tar archives on tape and the risk they carry, i.e. the data would be unrecoverable after the first damaged bit.

Now at that time I knew that bzip2, unlike gzip, is internally a blocking algorithm and it should be possible to recover all undamaged blocks after the damaged one.

Test RESULTS:

tar archive of 90MB mails, various size, mostly small

tar -cvjf ARCHIVE.tar.bz2

bvi to damage the file at about 1/3 (just changing a few bytes)

tar -xvjf ARCHIVE.tar.bz2

produces an error and refuses to continue after the damage. --ignore-failed-read doesn't help at all, and neither does -i.

Running bzip2recover produces a set of files rec00xxFILE.tar.bz2. I decompressed them individually and cat'ed all the good ones into tar:

tar produces an error where the data are suddenly missing, skipping to next file header, but it's not recovering anything beyond the error. It seems it's unable to locate the next file header and simply skips through the remaining file. I also tried to run tar on the decompressed blocks after the error only -- same result: It's skipping till next file header, doesn't find one and ends with an error.
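The salvage procedure described above can be sketched roughly as follows. This is only an illustration: salvage_bz2 and its two arguments are made-up names, and it assumes bzip2recover writes its rec00001... pieces next to the input file.

```shell
#!/bin/sh
# Salvage sketch: keep only the bzip2 blocks that still pass an integrity test.
# salvage_bz2 and its arguments are illustrative names, not part of bzip2.
salvage_bz2() {
    archive=$1      # damaged input, e.g. ARCHIVE.tar.bz2
    out=$2          # uncompressed stream assembled from the good blocks
    dir=$(dirname "$archive")
    base=$(basename "$archive")

    # bzip2recover writes rec00001<name>, rec00002<name>, ... beside the input.
    bzip2recover "$archive" || return 1

    : > "$out"
    for piece in "$dir"/rec*"$base"; do
        if bzip2 -t "$piece" 2>/dev/null; then
            bzip2 -dc "$piece" >> "$out"      # good block: append its data
        else
            echo "dropping damaged block: $piece" >&2
        fi
    done
}
```

Something like `salvage_bz2 ARCHIVE.tar.bz2 salvaged.tar && tar -xvf salvaged.tar` would then hand the result to tar. As the test results here show, tar may still lose its place after the gap, so this recovers data blocks, not necessarily files.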

In my tar "tar (GNU tar) 1.13.18" I discovered the following option (man page):

--block-compress

this option is non-existent in "tar --help" and running:

tar -cvzf ARCHIVE.tar.gz --block-compress

says:

tar: Obsolete option, now implied by --blocking-factor

Writing archives with --block-compress and/or --blocking-factor 2[0] does not improve things very much. Several times (with gzip and a blocking factor of 2, i.e. 1kB) I was lucky and the error was in one large mail (attachment). In that case tar was able to locate the next file header and I lost only the one damaged mail. When I introduced some more damaged blocks, tar was suddenly skimming through the remainder of the tar file again without recovering any more files.

Conclusion:

- it still seems to be highly risky to use compression on tape archives

- blocking improves chances -> use a very small blocksize.

One question remains: Can some flag improve tar's behaviour in locating the next file header? I couldn't find one in either tar --help or the man page.

I'm also starting to wonder how tar reacts to several unreadable tape blocks and how it's going to locate the next file headers after that.

I'm ordering the head cleaning tape I think....

K.-H.

Daemon vs CGI spawning processes

Dear all,

recently, I switched from using CGI to run a program to using the SOAP-Lite 0.55 XML-RPC Daemon to run the same program.

The only noticeable difference between the two is that with CGI the web page reloaded straight away, but with the new daemon the web page waits for the program to finish before reloading.

I have no idea about CGI and perl daemons, so I'm writing to this list to ask about processes. It seems to me that the Httpd daemon (Apache2) will spawn its own CGI process that handles the program independently, whilst the self-created daemon doesn't.

I'm posting the daemon's code below if it helps.

See attached soap-daemon.Seaver.pl.txt
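One possible reading of the difference, offered as a guess since the daemon code is only attached: a handler that runs the program inline cannot reply until the program exits, while a handler that detaches the program can reply at once. The sketch below shows the two patterns in plain shell. long_job, handle_blocking and handle_detached are made-up names, and the 3-second sleep stands in for the real program.

```shell
#!/bin/sh
# long_job is a hypothetical stand-in for the real program.
long_job() {
    sleep 3
    echo "job finished" >> "${JOB_LOG:-/tmp/job.log}"
}

# Inline call: the reply is not sent until long_job returns.
handle_blocking() {
    long_job
    echo "reply sent"
}

# Detached call: long_job keeps running in the background with its output
# pointed away from the caller, so the reply goes out immediately.
handle_detached() {
    long_job >/dev/null 2>&1 &
    echo "reply sent"
}
```

In a Perl daemon the equivalent of the detached variant would presumably be a fork() before starting the program, with the child's output handles closed or redirected.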

Compiling qt 3 lib

I'm wondering if someone might have an idea about what's going wrong with my effort to compile ver 3 of the qt lib. I DL'd the source and unpacked it to a dir under my normal user's home dir, and ran configure with a few options specified. It completed normally w/o error. But when I run make from the same dir, it errors out immediately:

Insp8000:~/Builds/qt-x11-free-3.1.2 > make

make[1]: Entering directory `/home/jkarns/Builds/qt-x11-free-3.1.2'

cd qmake && make

/bin/sh: cd: qmake: No such file or directory

make[1]: *** [src-qmake] Error 1

I should mention that I didn't intend to address the question so much toward qt explicitly, but rather I'm wondering if the problem might be due to peculiarities of gmake, or some other system configuration issue - I guess I'll look into updating gmake on this machine. I've run into similar problems when compiling other pkgs, although most pkgs compile w/o a problem.

VP and net load equation

Hello,

In a VPN-based network, is it possible to distribute the network load on the Linux server side so that each client gets the same speed on its Internet connection? (The clients connect through a VPN tunnel over a wireless network.)

Best regards

Bernhard Schneider

Linux Gazette entry in Wikipedia

I've added a stubby entry to Wikipedia (http://www.wikipedia.org/wiki/Linux_Gazette). Anyone care to expand on it?

[Jason] Hmmm....are you sure the wikipedia folks like that sort of thing?

http://www.wikipedia.org/wiki/Wikipedia_is_not_a_dictionary

Yeah, I think it's OK. I've gone more for encyclopaedic information than a mere definition, even if it is a stub. Starting a stub is encouraged - an extreme version of how a stub can grow from a definition (from FOLDOC) is here http://www.wikipedia.org/w/wiki.phtml?title=PS/2&action=history - in the space of one hour it changed completely, and grew to about 4 times the original size.

Anyway, I cite precedent: http://www.wikipedia.org/wiki/Macworld

Home Network Internet Connection Sharing

Hi,

Thanks for your generous help. You must be very good-hearted people.

[Thomas] Yes, we are

[Ben] Thank you for the compliment, doctor. We're all here for a number of reasons, but I have to agree with you to this extent: everyone who has stayed with The Gang over the long term has earned my respect for their demonstrated willingness to give their time to this endeavor. If you believe, as I do, that Linux is improving the world by reducing the amount of chaos in the world of computers, then all of us have contributed to making this world a better place.

I think I have identified an area of need: I have used RHL for years, and am now getting a few machines around the place for different uses including software and hardware testing. I'd like to set up a network at home, which I am finding very difficult because my USB port has taken over my eth0 and the configuration tools won't let me save anything...

[Ben] Could you clarify that, please? eth0 is an Ethernet network interface; USB is a completely separate physical entity that, as far as I know, shares almost nothing with it. I would suggest that you carefully read "Asking Questions of The Answer Gang" at

<http://www.linuxgazette.com/tag/ask-the-gang.html>;

particularly the part about "Provide enough, but not too much information". Simon Tatham's page, linked there, is a really good guide to effective bug reporting and following it will benefit you when asking questions in technical fora.

[Thomas] How do you mean "taken-over"? Indeed, USB and "eth0" (which I take to mean your NIC, or Network Interface Card) should be two separate issues (that is, unless your NIC is USB based, which is absurd.....).

But the real area of need I think is sharing an internet connection. In Australia we have cable modems and ADSL as well as dial up modems, and I noticed Mandrake just has a button for this! RHL is much more terse.

[Thomas] Tut, tut -- what you are describing here is a difference in the GUI configurations of the two different distributions. Essentially, the underlying information about each network IP, interface, etc, is stored in the same configuration files in "/etc".

[Ben] Linux is based on understanding the underlying mechanisms rather than just "pushing the button" - whatever buttons may exist in specific distros. The process of sharing a net connection is not a difficult one, and is documented in the Masquerading-Simple-HOWTO, available at the Linux Documentation Project <http://www.tldp.org/>. Read it and understand it, and you'll find that sharing a Net connection is very easy indeed.
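For a rough idea of what such a masquerading setup boils down to, here is a minimal sketch, assuming an iptables-based kernel and a gateway box with two interfaces. The interface names ppp0 and eth0 are guesses about the hardware, and the run helper and share_connection are illustrative names. By default the script only prints the commands.

```shell
#!/bin/sh
# Connection-sharing sketch. EXT_IF and LAN_IF are assumptions about your hardware.
EXT_IF=${EXT_IF:-ppp0}    # interface facing the Internet (assumed)
LAN_IF=${LAN_IF:-eth0}    # interface facing the home network (assumed)

# Print each command by default; set DRY_RUN=0 and run as root to apply them.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

share_connection() {
    # Let the kernel route packets between interfaces.
    run sysctl -w net.ipv4.ip_forward=1
    # Rewrite the source address of everything leaving via the outside interface.
    run iptables -t nat -A POSTROUTING -o "$EXT_IF" -j MASQUERADE
    # Accept traffic arriving from the home network for forwarding.
    run iptables -A FORWARD -i "$LAN_IF" -j ACCEPT
}
```

Calling share_connection prints the three commands for review. The HOWTO remains the place to check them against your own setup before applying anything.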

I'll copy this to RHL, too, so they know the difficulties I'm having.

[Thomas] I wouldn't bother -- RH are most likely not concerned with helping you setup your network.

On the contrary, if changing something minimal about their installer would win them a few people more from one of the other distros, they might be inclined to make that easier. Also, if they never hear complaints they have to assume it's all good, right? -- Heather

It is hard to find authoritative info about this.

Thank you again,

Julian

[Ben] Not really. The Answer Gang's Knowledge Base contains this information; searches of the Net (I use Google but any search engine will find this) will come up with hundreds of thousands of hits. The trick is to search for knowledge on the topic rather than a button to push.

[Thomas] Your question is extremely loose -- what exactly do you want, what type of network? I only use PLIP, but that is only because I don't have any NICs at the moment.... I suspect that this approach in networking is not what you want.

Please take a look through the past issues of the linux gazette - we have a search engine at the main site:

http://www.linuxgazette.com

and especially through the knowledge base (above).

When you can refine your question a little more, please let us know

I read your "How to Create a New Linux Distribution: Why?"

This was a TAG thread in issue 39, quite a long time ago. The number of distros has increased drastically, but the need to ask "Why?" before sprouting a new one hasn't changed - in fact, if anything, it's gotten more important than ever... -- Heather

I have a similar idea. However, I don't know if I would go as far as calling it a distribution. All I want is to semi-duplicate an environment I have set up.

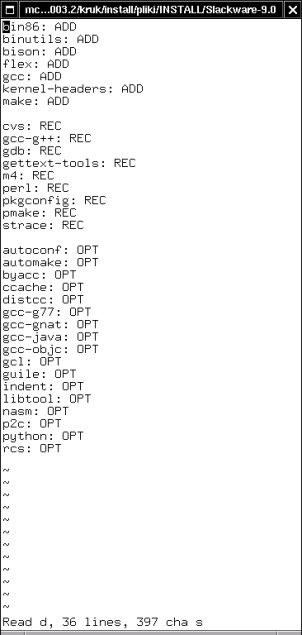

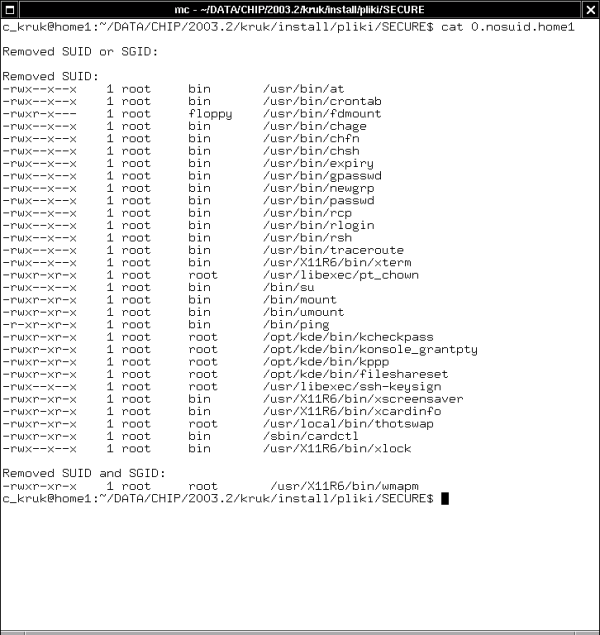

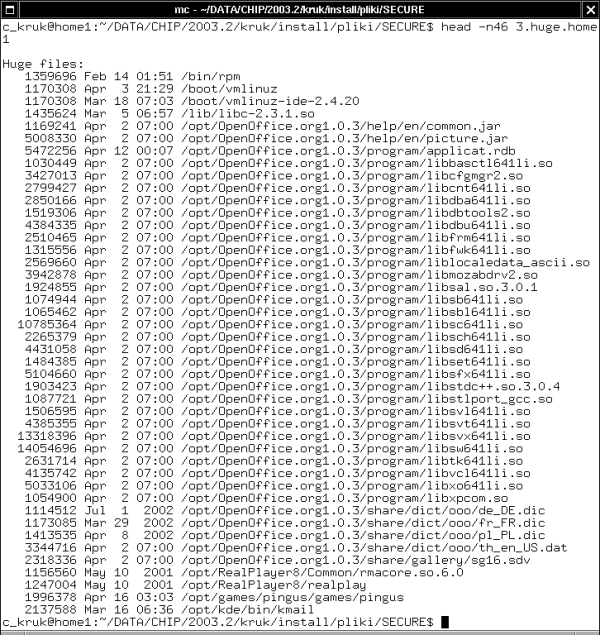

I would like to somehow create an installable version of my slackware system. Not a ghost but one where you can alter partitions and select (auto select) nic, MB-features etc, upon installation.

[Thomas] Installable version??? Hmmm, how do you mean? The first distribution I ever used was Slackware 2.0, and that was installable. I disagree with your methods. Tweaking partitions upon installation is perhaps fatalistic, especially if you don't know what you're doing. And in any case, what is it that you're trying to achieve? I'd have said that most Linux distros do a damn good job at installing Linux.

I'd be inclined to use a chroot first so that you can test it before you go live. Unfortunately, I don't have enough experience / knowledge to provide you with that. Heather Stern may well pipe up, I know that she does exactly that all the time, using chroot.

Yes - I either set aside a whole partition (for a "one big slash" installation of the given type) or prepare a file and format it as ext2 (for loopback mounting) then only mount the given environment when I need it. Compressed instances of the loopback version can serve as nice backups or baselines for fresh installs on a lab system. I often make a point of leaving bootloader code out of them, though; something I need to add back in when preparing those same lab boxen. -- Heather
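The loopback-file variant can be sketched roughly like this. make_image and its arguments are illustrative names, and the sketch assumes GNU dd and the e2fsprogs mke2fs are installed; it only creates and formats the file.

```shell
#!/bin/sh
# make_image FILE SIZE_MB: create a filesystem inside an ordinary file.
# Both argument names are illustrative.
make_image() {
    image=$1
    size_mb=$2
    # Allocate the file by writing zeros to it.
    dd if=/dev/zero of="$image" bs=1M count="$size_mb" 2>/dev/null || return 1
    # -F lets mke2fs work on a regular file instead of a block device; -q keeps it quiet.
    mke2fs -q -F "$image"
}
```

After `make_image slack.img 512`, root could attach it with `mount -o loop slack.img /mnt/test`, and `gzip -c slack.img > slack.img.gz` would give the kind of compressed baseline mentioned above. The file name and mount point here are placeholders.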

Do you know a good way to do this or maybe just some pointer on where and how I should get started?

Many thanks if you take the time to answer this.

Best regards - Jon

Ps. Do I need to subscribe to receive the answer? Ds.

[Thomas] Nope, by common courtesy, we always CC the querent (that's you).

Sending people their reply directly, they get it right away, and it's nice that they can see their answer even if their thread doesn't make it into the magazine.

I believe the set of scripts called YARD aims at being something like what you want; visit Freshmeat.Net to look it up. YARD stands for "yet another rescue disc" and is about rescuing the specific system in front of you, instead of just being a general case utility disc like Tom's Rtbt, LNX-BBC, superrescue, etc. -- Heather

[Kapil] You should take a look at mindi which tries to create a distribution out of an existing installation. It runs from a Live CD but can also be installed so that takes care of your "partitioning" issue (perhaps you need "mondo" to actually install your home dirs and so on). To handle hardware detection such as NIC, video, etc. you must install "discover" or "kudzu" and after that (as far as I can see) you are on your own.

Linux Gazette in Palm Format

First off I'd like to say that the magazine is excellent; I've only just come across it. I've been using Linux for around 5 years and there are still some good hints and tips to be found! Just a suggestion, but is there any possibility of a Plucker version of your mag? I read a lot on my Palm and this would be most useful. I have found that the downloadable HTML version of each mag has links in the contents page that don't resolve within the document but to separate files on the server, thus making conversion awkward.

James Herbert Senior Software Engineer

[Mike] I assume you mean TWDT.html in each issue. Yes, we can assemble it using a custom TOC page with internal links. It may take a couple months till we get around to it though.

The way it's put together is by merging the fragment articles and columns along some fairly plain "startcut"/"endcut" blocks in the templates ... except for The Answer Gang, where I provide a TWDT edition for the back end.

But to solve his actual problem, he really wants to check out Sitescooper (www.sitescooper.org) and pick up the regularly prepared scoop of the LG issue. I hope they keep 'em up to date. It occurs to me that maybe we should list them on the mirrors page. That's http://scoops.sitescooper.org and it's available in 3 different Palm friendly formats. Plus sitescooper is open source - just download and have fun. There are even flavors for MacOS and Windows users, though it's worth noting you need a working copy of perl. -- Heather

[Mike] If there's anything else required to put it into Palm format, send us a HOWTO if there's one available. However, that might work better as a script on your end that downloads the issue (perhaps the FTP file) and converts it to plucker format, whatever that is, since we have so many versions of the same thing already (web files, FTP tarball, TWDT, TWDT.txt), and only a few readers have Palms.

[Ben] You can use "bibelot" (available on Freshmeat, IIRC); it's a Perl script that converts plaintext into Palm's PDB format. I have a little script that I use for it:

See attached pdbconv.bash.txt

This uses the textfile name (sans extension) for the new file name and the PDB internal title, and does the right thing WRT line wrapping. Converting the TWDT would require a single invocation.

Does the raw PDB format have a size limit? Our issues can get pretty big sometimes... -- Heather

Your web site

Hi

I've been an LG reader for 5 years now, and a year (or maybe more) ago you changed the web site. I really preferred the old site. Why?

Hmmmm it's hard to place a finger on it. One definite thing I miss is that I used to love having the really big index, which would show you a huge table of contents, with the table of contents of every issue listed.

[Mike] That is still around, but it's called "site map" now. There's a link on the home page, or bookmark the direct URL:

http://www.linuxgazette.com/lg_index.html

I'm blind and use a screen reader, and I could use my screen reader's search facility to find topics -- if I wanted to know about ncurses, I just search for that, and would hear the latest article which had ncurses in the title. Pressing a single key again and again would take me to all articles with ncurses, for example, in the title. Can this be reintroduced? I know the search feature does something similar, but I still think it makes it harder (for me) to find what I want. That's the main thing I can think of right now, but I'll keep you informed if I think of the other little things.

But with regards to the content of the magazine - it's excellent, and the archives are a wonderful resource.

Saqib Shaikh

[Thomas] You're quite welcome

By The Readers of Linux Gazette

Reading email headers

Hey, all -

A while ago, someone asked me how to read email headers to track a spammer (Karl-Heinz, IIRC.) I kinda blew it off at the time (ISTR being tired and not wanting to write a long explanation - sorry...) Lo and behold, I ran across this thing on the Net - it's an ad site for a piece of Wind0ws software which tracks (and maps the track - sooo cuuute!) the path an email took based on the headers. The explanation there is a rather good one; it's pretty much how I dig into this stuff when I get a hankering to slam a couple of spammers (yum, deep-fried with Sriracha sauce... I know, it wrecks my diet, but they're so nicely crunchy!)

The equivalent Linux tools that you'd use to do what these folks have to write commercial software for are laughably obvious. Anyway - enjoy.

<http://www.visualware.com/training/email.html>

The same company puts out a 'traceroute' program that plots each hop on a world map. Cute. Anyway, a google for:

http://www.google.com/search?q=how+to+read+email+headers

returns a fair number of articles.

Jason Creighton

Just to make it clear, Ben's talking about some mswin software, and I dunno if he checked that it runs under WINE. But between following Jason's advice, and xtraceroute (http://www.dtek.chalmers.se/~d3august/xt) - our toy for traceroute on a world map - the world of free software should be able to come up with a similar tool. A curious tidbit is that IP addresses whose ranges aren't known to the coordinate system end up at 0,0, the center of Earth's coordinate system... deep underwater in the Atlantic Ocean, near Africa. I wouldn't be too surprised if a lot of spammers live there. Good spear-fishing, fellow penguins. -- Heather
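As a starting point for the do-it-yourself route, here is a small sketch that pulls the Received: lines out of a saved message and lists them oldest first, which is the order the mail actually travelled. received_path is a made-up name. The sketch assumes the header name is spelled exactly "Received:" and says nothing about whether a given line was forged.

```shell
#!/bin/sh
# received_path FILE: list the Received: headers of a saved message, oldest hop first.
# Each relay prepends its own line, so the last Received: header is the earliest hop.
received_path() {
    awk '
        /^$/ { exit }                      # headers end at the first blank line
        /^[ \t]/ {                         # continuation of a folded header
            if (in_recv) { sub(/^[ \t]+/, ""); hop[n] = hop[n] " " $0 }
            next
        }
        /^Received:/ { in_recv = 1; hop[++n] = $0; next }
        { in_recv = 0 }                    # some other header
        END { for (i = n; i >= 1; i--) printf "%d: %s\n", n - i + 1, hop[i] }
    ' "$@"
}
```

From there, the addresses in each hop can be fed to whois or traceroute by hand. Lines near the bottom are the easiest for a spammer to fake, so the hops added by your own provider's servers are the ones to trust first.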

colorful prompt sign

Hi all,

I saw a colorful prompt on an RH 9.0 box at a local computer book shop today, but the operator (who has recently migrated from M$ to Linux) told me that she doesn't know how to do this, as the shop purchased the machine with RH 9.0 preloaded (and with that colorful prompt already set up). So could someone please tell me how to do this?

which answers your question above
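For reference, a colored bash prompt usually comes down to ANSI color escapes placed in the PS1 variable. The lines below are a minimal sketch for ~/.bashrc; the colors and layout are arbitrary choices, not whatever that shop's machine was using.

```shell
# Lines for ~/.bashrc. The colour choices are arbitrary.
# \[ and \] tell bash that the enclosed escape codes take up no width on screen.
green='\[\e[1;32m\]'
blue='\[\e[1;34m\]'
reset='\[\e[0m\]'
# \u = user, \h = host, \w = working directory, \$ = "#" for root and "$" otherwise
PS1="${green}\u@\h${reset}:${blue}\w${reset}\\$ "
```

Leaving out the \[ \] markers tends to confuse bash about the prompt's length, which shows up as garbled line wrapping on long commands.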


About autofs and write permissions for floppy

I have just configured /etc/auto.master and /etc/auto.floppy. I can now access the floppy without needing to mount it first. But I don't have write access to it. Only root has write access to my floppy.

here are the files I configured:

/etc/auto.master -

/mnt/cdrom /etc/auto.cdrom --timeout=60

/mnt/floppy /etc/auto.floppy --timeout=30

/etc/auto.floppy -

floppy -users,suid,fstype=vfat,rw :/dev/fd0

Did I do something wrong? What did I forget?

Thank you in advance for all information you could provide.

Elias Praciano

[Kapil] The automatically mounted filesystems are mounted by the autofs daemon which runs as root and thus a "user" entry will cause files to be owned by "root".

One solution is to use the "mount" command as the user to mount the floppy.

Another solution, if the floppy is a DOS floppy, is to put "umask=000" as a mount option.

[Thomas] I absolutely hate "autofs". I cannot stand it! How difficult can it be to either type: "mount" or "umount"?? Still, each to their own I suppose

Am I right in assuming that autofs overrides /etc/fstab in some way? Or is it that you specify "autofs" as the filetype within /etc/fstab ? Either way it shouldn't really matter.

To be on the safe side, I would just make sure that the entry for your floppy drive in "/etc/fstab" is genuine. By that I mean that you should check that the options:

exec rw

are present.

IIRC, "supermount" used to do ...

[Jimmy] Oh no! Supermount is evil! Especially for floppies. supermount tries to figure out when the disk has changed, and mostly fails.

[Thomas] If these suggestions still generate the same problem, please post us a copy of your "/etc/fstab".

Ah....I mentioned it because I vaguely remember John Fisk mentioning it in one of his Weekend Mechanic articles a long time ago.

Personally, I don't see why you don't just issue:

mount umount

or even better, use "xfmount /dev/abc"

since as soon as you close "xftree", the device is umounted

[Ben] I use a series of scripts (all the same except for the device name) called "fd", "cdr", and "dvd" to mount and unmount these:

See attached dvd.sh.txt

I could probably have one script like this with a bunch of links, and use the name as the device to mount, but I'm too lazy to change something that's worked this well and this long.

Thank you all!

Rahul's solution solved my problem. I added myself to the group 'floppy' and changed the mountpoint group to 'floppy'. Then I changed the file auto.floppy to:

floppy -users,gid=floppy,fstype=vfat,rw,umask=002 :/dev/fd0

It's working fine now!

Thank you again. I learned a lot with you.

Best regards!
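A note on the umask= option used in this thread: vfat has no per-file Unix permissions, so the driver starts from mode 777 and clears whichever bits the mask names. The small helper below (vfat_mode is a made-up name) just does that arithmetic, which makes it easy to check a mask before putting it in a map file.

```shell
#!/bin/sh
# vfat_mode MASK: print the permission bits a vfat mount would show for umask=MASK.
# The mask is read as octal; the driver clears those bits from 777.
vfat_mode() {
    printf '%03o\n' $(( 0777 & ~0$1 ))
}
```

`vfat_mode 002` prints 775 (owner and group may write, which matches the gid=floppy setup above), while `vfat_mode 666` prints 111, i.e. a mask of 666 would take read and write away from everyone.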

linux infrared

hi. I'm using the circuit described there and it works great in Linux with lirc. Other programs that you will probably find useful are:

lirc-xmms-plugin smartmenu irmix xosd

You can also recompile mplayer with lirc support. The circuit cost me ~ 3$ (without the tools that I already had). Hope that I helped. If you need more information, mail me.

A disabled querent asked about LIRC in general ... -- Heather

[JK Malakar] Nice to hear your question on LIRC. Yes, I have made the home-brew IR receiver, which is easy to build as well as cheap. Now I can enjoy MP3, MPlayer, xine etc. and even shut down the machine using my Creative Infrasuite CD drive remote -

you will get everything at http://www.lirc.org

[Robos] For more info, or if you have a question, I would say go and ask the source: the LIRC page also has a mailing list where you can surely ask some competent people.

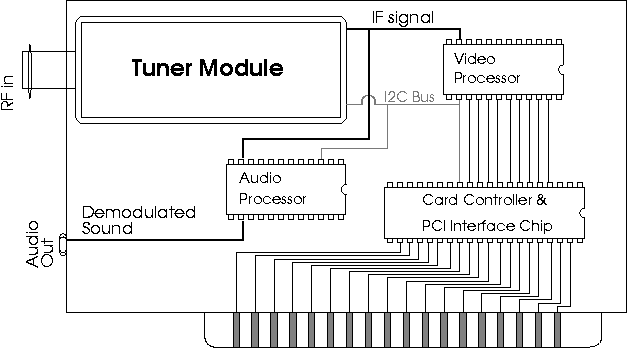

OK, now your question: I looked at LIRC myself AGES ago and wanted to build that thing too. Didn't do it, mind you (forgot), but I think the hardware and software parts were quite well documented. I looked again just now and this here http://www.manoweb.com/alesan/lirc looks really nice and easy. If you think you'd have problems with homemade stuff, try a TV card (they can be had for as little as 50 Euros here in Germany); quite a lot of them feature an infrared port already and are quite easy to set up (and you have the benefit of watching and recording TV too). There are also some IrDA adapters to buy for all ports (parallel, serial, even USB), but I think they are more expensive than a TV card.

On A Slower Computer

In reference to Help Wanted #3, Issue 90 -- Heather

On a slower computer...

Now, small distros and distros-on-floppy we have by the dozens. But RH 8 compatible? Or kickstart floppies that chop out a bunch of that memory hogging, CPU slogging stuff? An article on keeping your Linux installers on a diet would be keen. Just in time for Summer, too. -- Heather

Definitely check out the RULE project (http://www.rule-project.org/en). They have installers for Red Hat 7.x and 8.0 for low memory and older processor machines. I have personally used it to install a minimal RH 7.3 system on a P75 with 16MB of RAM. Great stuff!

-- William Hooper

[Thomas Adam, the LG Weekend Mechanic] Indeed, William. I contribute to this project, since I myself use archaic technology.

I'm in the process of writing some docs for installing XFree86 on a 486 with 16MB Ram using FVWM2.

I leave out the byplay of one-downmanship as Answer Gang folk chimed in with the older and slower machines of yesteryear which either gave them their start into Linux or still operate as some kind of server today. The winner and new champeen of Lowball Linuxing is Robos, who wondered why his 486/33 notebook with 16 MB RAM was even slower than its usual glacial self - since all but 4 MB of the memory had come a little loose and X had come up anyway. The winning WM for low end systems seems to be FVWM, with a decent place for IceWM, and a surprise showing for E - provided you use a theme on a serious diet. K is not recommended, and we don't exactly recommend Gnome unless it's a quiet and lazy day for you, either... -- Heather

Interesting take on C/C++/etc. by Jon Lasser

I think C is used as often as it is because it's the lowest common denominator - write a library in C, you can use it from any other language. It won't be the same for any of the scripting languages until Parrot is widespread.

In case anyone's interested, I came across these links --

Linux Journal Weekly News Notes - Tech Tips

It's always inconsiderate to quote more of someone's posting than you have to in a mailing list. Here's how to bind a key in Vim to delete any remaining quoted lines after the cursor:

map . j{!}grep -v ^\>^M}

where . is whatever key you want to bind.

If you want to train a Bayesian spam filter on your mail, don't delete non-spam mail that you're done with. Put it in a "non-spam trash" folder and let the filter train on it. Then, delete only the mail that's been used for training. Do the same thing with spam.

It's especially important to train your filter on mail that it misclassified the first time. Be sure to move spam from your inbox to your spam folder instead of merely deleting it.

To do the training, edit your crontab with crontab -e and add lines like this:

6 1 * * * /bin/mv -fv $HOME/Maildir/nonspam-trash/new/* $HOME/Maildir/nonspam-trash/cur/ && /usr/local/bin/mboxtrain.py -d $HOME/.hammiedb -g $HOME/Maildir/nonspam-trash

6 1 * * * /bin/mv -fv $HOME/Maildir/spam/new/* $HOME/Maildir/spam/cur/ && /usr/local/bin/mboxtrain.py -d $HOME/.hammiedb -s $HOME/Maildir/spam

Finally, you can remove mail in a trash mailbox that the Bayesian filter has already seen:

2 2 * * * grep -rl X-Spambayes-Trained $HOME/Maildir/nonspam-trash | xargs rm -v

2 2 * * * grep -rl X-Spambayes-Trained $HOME/Maildir/spam | xargs rm -v

Look for more information on Spambayes and the math behind spam filtering in the March issue of Linux Journal.

It's easy to see what timeserver your Linux box is using with this command:

ntptrace localhost

But what would happen to the time on your system if that timeserver failed? Use

ntpq -p

to see a chart of all the timeservers with which your NTP daemon is communicating. An * indicates the timeserver you currently are using, and a + indicates a good fall-back connection. You should always have one *, and one or two + entries mean you have a backup timeserver as well.
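To check the "one *, at least one +" rule without reading the whole chart, the tally character at the start of each peer line can be picked out with awk. This is a sketch that assumes the usual two header lines at the top of the `ntpq -p` output; ntp_peer_summary is a made-up name.

```shell
#!/bin/sh
# ntp_peer_summary: read "ntpq -p" output on stdin and report the selected
# server (*) and the fall-back candidates (+).
ntp_peer_summary() {
    awk '
        NR <= 2 { next }                                # skip the two header lines
        /^\*/   { print "current: " substr($1, 2) }     # strip the tally character
        /^\+/   { print "backup:  " substr($1, 2) }
    '
}
```

Usage would be `ntpq -p | ntp_peer_summary`; no "backup:" line in the result means there is nothing to fall back on if the current server goes away.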

In bash, you can make the cd command a little smarter by setting the CDPATH environment variable. If you cd to a directory, and there's no directory by that name in the current directory, bash will look for it under the directories in CDPATH. This is great if you have to deal with long directory names, such as those that tend to build up on production web sites. Now, instead of typing

cd /var/www/sites/backhoe/docroot/support

you can add this to your .bash_login

export CDPATH="$CDPATH:/var/www/sites/backhoe/docroot"

and type only

cd support

This tip is based on the bash section of Rob Flickenger's Linux Server Hacks.
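To see the lookup in action without touching a real web tree, the sketch below builds a throwaway directory layout and lets cd find a subdirectory through CDPATH. cdpath_demo and all paths in it are invented for the demonstration.

```shell
#!/bin/sh
# CDPATH demonstration in a throwaway directory tree (all paths are invented).
# The function body runs in a subshell, so the caller's directory is untouched.
cdpath_demo() (
    root=$(mktemp -d) || exit 1
    mkdir -p "$root/sites/backhoe/docroot/support" "$root/elsewhere"
    CDPATH="$root/sites/backhoe/docroot"
    cd "$root/elsewhere" || exit 1
    # No ./support exists here, so cd falls back to the directories in CDPATH.
    # A CDPATH hit makes cd print the target; discard that and call pwd instead.
    cd support >/dev/null || exit 1
    pwd
    cd / && rm -rf "$root"
)
```

Running cdpath_demo prints a path ending in sites/backhoe/docroot/support, even though the cd was issued from an unrelated directory.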

In order to store persistent preferences in Mozilla, make a separate file called user.js in the same directory under .mozilla as where your prefs.js file lives.

You can make your web experience seem slower or faster by changing the value of the nglayout.initialpaint.delay preference. For example, to have Mozilla start rendering the page as soon as it receives any data, add this line to your user.js file:

user_pref("nglayout.initialpaint.delay", 0);

Depending on the speed of your network connection and the size of the page, this might make Mozilla seem faster.

If you use the Sawfish window manager, you can set window properties for each X program, such as whether it has a title bar, whether it is skipped when you Alt-Tab from window to window and whether it always appears maximized. You even can set the frame style to be different for windows from different hosts.

First, start the program whose window properties you want to customize. Then run the Sawfish configurator, sawfish-ui. In the Sawfish configurator, select Matched Windows and then the Add button.

You can't include web documents across domains with SSI, but you can use an Apache ProxyPass directive to map part of one site into another.

You don't need to pipe the output of ps through awk to get the process ID or some other value you want. Use ps --format to select only the needed fields. For example, to print only process IDs, type:

ps --format=%p

To list only the names of every program running on the system, with no duplication, type:

ps ahx --format=%c | sort -u
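Along the same lines, the field selection combines nicely with uniq to show how many copies of each program are running. This sketch uses the `-o comm=` spelling of the format option, where the trailing = suppresses the column header; program_counts is a made-up name.

```shell
#!/bin/sh
# program_counts: count running instances per program name, busiest first.
# "comm=" selects the command name and suppresses the header line.
program_counts() {
    ps -e -o comm= | sort | uniq -c | sort -rn
}
```

`program_counts | head` gives a quick view of what the box is mostly running.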

If you have an ssh-agent running somewhere on your system and want to use it, you can get the SSH_AUTH_SOCK environment variable from one of your processes that does have the agent's information in its environment:

for p in `ps --User=$LOGNAME --format=%p --no-headers`; do s=`strings /proc/$p/environ 2>/dev/null | grep '^SSH_AUTH_SOCK='` && export "$s" && break; done

This is handy for cron jobs and other processes that start without getting access to ssh-agent in the usual ways.

Greetings from Heather Stern

Summer's looking bright and beautiful, the world is alive with free software, and we had oodles of good questions this month...

...many of which were in the LG knowledge base already. I think we had a record number of pointers back to Ben's writeup in Issue 63 about boot records.

...some of which were from students who've put their thinking caps on, and are now asking the kind of considered questions their professors can be proud of. Us too. These kinds of students are the ones who will drive computer science into new nooks and crannies that it hasn't spread into yet. (Cue the English muffin with fresh butter. Yum.) May they graduate with high honors and a number of cool project credits under their belt.

I spent Memorial Day weekend at a science fiction convention - readers who've been keeping up know I mentioned this last month - so here's how we did. Linux seems to have all the web browsers anyone could use, and then some. Good. We've gotten much better at having sound support, and handling those whacky plug-ins sites seem to like to use. Our little netlounge was about half Macs, and there are a few people whose prejudices about what the GUI ought to work like drove them into Linux' arms - and they were pretty okay with that. Good stuff, Maynard.

Except for the folks who had to deal with office software and an office-like feature set. Floppy support under Linux desperately confused people - if it auto mounted, they couldn't figure out how to make it let go of a floppy safely (and of course, these are PCs, so they'll cheerfully let go of the floppy unsafely). If it didn't, they couldn't figure out how to use a floppy without technical assistance. Mtools are great but only if you already know about them. And they suck for letting someone save things straight onto the floppy.

Word processors still seem to be flighty and fragile creatures. I saw not one but two of the beasties die and take a document with them just because the user wanted to switch to landscape mode. The frustrated user stomped off in a huff; he won't be using Linux again all that soon. Spreadsheets default to saving files in their own whacky and hopelessly incompatible formats, with no particularly simple way to change that behavior visible from the configs. I mean, this is Linux; I'm sure it can sing sonatas if I tell it to. But I am the Editor Gal with a world of notes at my fingertips. These hapless folk who just wanted to mess with numbers and run a couple of printouts are not doing so well.

And don't get me started about setting up printing...

But hey, K desktop looks pretty. There are a decent number of users who will forgive the OS that looks pretty, because they can see that some effort is being put into it.

Me, I'd kind of like to see more programs defend themselves against imminent disaster, and at least pop up some sort of error message, note that they can't safely use this feature yet, or the like. We've got too many good coders out there - we shouldn't be having to look at raw segfaults. Compared to that.... why, the Blue Screen of Death almost looks well documented and friendly.

Until next month, folks. And if your project does a little more sanity checking and cleaner complaints because you saw this, let us know, okay? I like to know when these little rants of mine make a difference. Trust me - it really will make Linux just a little more fun for folks at the keyboard.

Combining multiple PDFs into one

From Faber Fedor

Answered By Ben Okopnik, Yann Vernier

From the chaos of creation

just the final form survives

-- The World Inside The Crystal, Steve Savitsky

We could have just posted the finished script in 2c Tips, but there are juicy Perl bits to learn from the crafting. Enjoy. -- Heather

Hey Gang,

I was playing with my new scanner last night (under a legacy OS unfortunately) when I realized a shortcoming: I wanted all of the scanned pages to be in one PDF file, not in separate ones. Well, to that end, I threw together this quick and dirty Perl script to do just that.

The script assumes you have Ghostscript and pdf2ps installed. It takes two arguments: the name of the output file and a directory name that contains all of the PDFs (which have .pdf extensions) to be combined, e.g.

./combine-pdf.pl test.pdf test/

I'm sure you can point out many flaws with the script (like how I grab the command line arguments and clean up after myself), but that's why it's "quick and dirty". If/when I clean it up, I'll repost it.

See attached combine-pdf-faber1,pl.txt

[Ben] If you don't mind, I'll toss in some ideas.

See my version at the end.

#!/usr/bin/perl -w
use strict;

Good idea on both.

# n21pdf.pl: A quick and dirty little program to convert multiple PDFs

# to one PDF requires pdf2ps and Ghostscript

# written by Faber Fedor (faber@linuxnj.com) 2003-05-27

if (scalar(@ARGV) != 2 ) {

You don't need 'scalar'. Scalar behavior (which is defined by the comparison operator) would cause the list to return the number of its members, so "if ( @ARGV != 2 )" works fine.

Okay. I was trying to get ptkdbi (my fave Perl debugger) to show me the scalar value of @ARGV and the only way was with scalar(). That's also what I found in the Perl Bookshelf.

[Ben] This is the same as "$foo = @foo". $foo is going to contain the number of elements in @foo.

my $PDFFILE = shift ; my $PDFDIR = shift;

You could also just do

my ( $PDFFILE, $PDFDIR ) = @ARGV;

Combining declaration and assignment is perfectly valid.

Cute. I'll have to remember that.

[Ben]

chomp($PDFDIR);

No need; the "\n" isn't part of @ARGV.

$PDFDIR = $PDFDIR . '/' if substr($PDFDIR, length($PDFDIR)-1) ne '/';

Yikes! You could just say "$PDFDIR .= '/'"; an extra slash doesn't hurt anything (part of the POSIX standard, as it turns out).

I know, but I really don't like seeing "a_dir//a_file". I always expect it to fail (although it never does).

[Yann] I'm no Perlist myself, but my first choice would be: $foo =~ s%/*$%/%;

Which simply ensures that the string ends with exactly one /.

[Ben]

That's one of the ten most common "Perl newbie" mistakes that CLPM wizards listed: "Using s/// where tr/// is more appropriate." When you're substituting strings, think "s///"; for characters, go with "tr///".

tr#/##s

Better yet, just ignore it; multiple slashes work just fine.

[Yann] I did say I'm no perlist. Tr to me would be the translation tool, for replacing characters, including deletion.

[Ben] Yep; that's exactly what it does. However, even the standard utils "tr" can compress strings - which is exactly what was needed here (note the "s"queeze modifier at the end.)

[Yann] Thank you. It's a modifier I had not learned but should have noticed in your mail. The script would have to tack a / onto the end of the string before doing that tr.

[Ben] You're welcome. Yep, either that or use the globbing mechanism the way I did; it eliminates all the hassle.

for ( <$dir/*pdf> ){

=head

Here's the beef, Granny! :)

All you get here are the specified files as returned by "sh".

You could also use the actual "glob" keyword which is an alias for the

internal function that implements <shell_expansion> mechanism.

=cut

# Mung individual PDF to heart's content

...

}

[Yann] I don't know how to apply it to the end of the string, which is very easy given a regular expression as the substitute command uses. I'm more used to dealing with sed. Remember, the input data may well look like "/foo/bar/" and not just "bar/".

[Ben] You can't apply it to the end of the string, but then I'd imagine Faber would be just as unhappy with ////foo/////bar////. "tr", as above, will regularize all of that.
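For readers following along at a shell prompt, the same squeeze trick works with the standard "tr" utility. This is only a sketch: "normalize_dir" is a made-up helper name, not something from Faber's or Ben's scripts, and it tacks on the trailing slash first, as Yann points out the script would have to.

```sh
# normalize_dir: hypothetical helper, not part of the scripts in this thread.
normalize_dir() {
    # Append one slash, then squeeze every run of slashes down to a single one.
    printf '%s/\n' "$1" | tr -s '/'
}

normalize_dir '////foo/////bar////'   # prints /foo/bar/
normalize_dir 'test'                  # prints test/
```

Like the Perl "tr#/##s", this regularizes slashes everywhere in the path, not just at the end.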

[Ben]

opendir(DIR, $PDFDIR) or die "Can't open directory $PDFDIR: $! \n" ;

Take a look at "perldoc -f glob" or read up on the globbing operator <*.whatever> in "I/O Operators" in perlop. "opendir" is a little clunky for things like this.

`$PDF2PS $file $outfile` ;

Don't use backticks unless you want the STDOUT output from the command you invoke. "system" is much better for stuff like this and lets you check the exit status.
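The two points above (glob instead of opendir, and checking the exit status instead of throwing it away) look like this when sketched in plain shell. "convert_each" and its arguments are assumptions made up for illustration; the converter is passed in so that something like pdf2ps, which accepts an input and an output file name, can be dropped in.

```sh
# convert_each: hypothetical helper. $1 = directory of PDFs,
# $2 = converter command, called as: converter input.pdf output.ps
convert_each() {
    dir=${1%/}                      # drop one trailing slash, if any
    converter=$2
    for pdf in "$dir"/*.pdf; do
        [ -e "$pdf" ] || continue   # the glob matched nothing
        # Run the converter and stop at the first failure.
        if ! "$converter" "$pdf" "${pdf%.pdf}.ps"; then
            echo "conversion failed for $pdf" >&2
            return 1
        fi
    done
}

# Possible use, assuming pdf2ps is installed:
#   convert_each test/ pdf2ps || echo "giving up" >&2
```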

Note - the following is untested but should work.

See attached combine-pdf-ben1.pl.txt

Thanks, I've cleaned it up and attached it. There's one thing that I couldn't make work, but first...

(now looking inside Ben's version)

die "Usage: ", $0 =~ /([^\/]+)$/, " <outfile.pdf> <directory_of_pdf_files>\n"

unless @ARGV == 2;

Uh, that regex there. Take $_, match one or more characters that aren't a / up to the end of line and remember it and place it in $0? Huh?

[Ben] Nope - it's exactly the behavior that Jason was talking about. "print" takes a list - that's why the members are separated by commas. The "match" operator, =~, says to look in whatever comes before it; "$_" doesn't require it.

print if /gzotz/;          # Print $_ if $_ contains "gzotz"
print if $foo =~ /gzotz/;  # Print $_ if $foo contains "gzotz"
print $foo if /gzotz/;     # Print $foo if $_ contains "gzotz"

So, what I'm doing is looking at what's in "$0", and capturing/returning the part in the parens as per standard list behavior. It's a cute little trick.

I guess I will have to do this one soon in my One-Liner articles; it's a useful little idiom.

I had to move a few things around to get it to work. I did have one problem though

#convert ps files to a pdf file
system $GS, $GS_ARGS, $filelist
and die "Problem combining files!\n";

This did not work no way, no how. I kept getting "/undefinedfilename" from GS no matter how I quoted it (and I used every method I found in the Perl Bookshelf).

[Ben] Hm. I didn't try it, but -

perl -we'$a="ls"; $b="-l"; $c="Docs"; system $a, $b, $c and die "Fooey!\n"'

That works fine. I wonder what "gs"s hangup was. Oh, well - you got it going, anyway. I guess there's not much of a security issue in handing it to "sh -c" instead of execvp()ing it in this case: the perms will take care of all that.

To get it to finally work, I did:

#convert ps files to a pdf file

my $cmd_string = $GS . $GS_ARGS . $filelist ;

system $cmd_string

and die "Problem combining files!\n";

<shrug>

Anywho, here's the final (?) working copy:

See attached combine-pdf-faber2.pl.txt

[Ben] Cool! Glad I could help.
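One possible explanation for the "/undefinedfilename" puzzle, offered as a guess since the attached script isn't reproduced here: the list form of Perl's "system" skips the shell and hands each list element to the program as exactly one argument. If $GS_ARGS held several options in one string, gs would have received them glued together as a single word. The shell shows the same distinction between a quoted string and one that gets split; the option string below is hypothetical.

```sh
# count_args: prints how many arguments it was given.
count_args() { echo "$#"; }

gs_args='-q -dNOPAUSE -dBATCH'     # hypothetical option string, three options in one variable

one=$(count_args "$gs_args")       # quoted: stays one word, like one element of a system() list
split=$(count_args $gs_args)       # unquoted: the shell splits it on whitespace

echo "quoted: $one argument(s), unquoted: $split argument(s)"
```

Concatenating everything into one command string, as Faber ended up doing, lets "sh -c" do that splitting, which would explain why it worked.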

concurrent processes

From socrates sid

Answered By Jim Dennis

What are concurrent processes how they work in distributed and shared systems? Can they be executed parallel or they just give the impression of running parallel?

[JimD]

"concurrent processes" isn't a special term of art. A process is a program running on a UNIX/Linux system, created with fork() (a special form of the clone() system call under Linux). A process has its own (virtual) memory space. Under Linux a different form of the clone() system call creates a "thread" (specifically a kernel thread). Kernel threads have their own process IDs (PIDs) but share their memory with other threads in their process.

There are a number of technical differences between processes and kernel threads under Linux (mostly having to do with signal dispatching). The gist of it is that a process is a memory space and a scheduling and signal handling unit; while a kernel thread is just a scheduling and signal handling unit. Processes also have their own security credentials (UIDs, GIDs, etc) and file descriptors. Kernel threads share common identity and file descriptor sets.

There are also "pseudo-threads" (pthreads) which are implemented within a process via library support; pseudo-threads are not a kernel API, and a kernel need not have any special support for them. The main differences between kernel threads and pthreads have to do with blocking characteristics. If a pthread makes a "blocking" form of a system call (such as read() or write()) then the whole process (all threads) can be blocked. Obviously the library should provide support to help the programmer avoid doing these things; there used to be separate thread aware (re-entrant) versions of the C libraries to link against pthreads programs under Linux. However, all recent versions of glibc (the GNU C libraries used by all mainstream Linux systems) are re-entrant and have clearly defined thread-safe APIs. (In some cases, like strtok(), there are special threading versions which must be used explicitly --- due to some historical interactions between those functions and certain global variables).

Kernel threads can make blocking system calls as appropriate to their needs -- since other threads in that process group will still get time slices scheduled to them independently.
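The "a process has its own memory space" point is easy to see from a shell prompt. A minimal sketch, assuming a shell such as bash that runs a parenthesized subshell as a forked child process:

```sh
counter=1

# The parentheses run these commands in a subshell, i.e. a forked child.
( counter=2; echo "child process sees counter=$counter" )

# The child's assignment happened in its own copy of memory.
echo "parent still sees counter=$counter"
```

Threads in one process would not behave this way; they would all see the same variable.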

Other parts of your question (which appears to be a lame "do my homework" posting, BTW) are too vague and lack sufficient context to answer well.

For example: Linux is not a "distributed system." You can build distributed systems using Linux --- by providing some protocol over any of the existing communications (networking and device interface) mechanisms. You could conceivably implement a distributed system over a variety of different process, kernel thread, and pthread models and over a variety of different networking protocols (mostly over TCP/IP, and UDP, but also possible using direct, lower level, ethernet frames; or by implementing custom protocols over any other device).

(I've heard of a protocol that was done over PC parallel ports; limited bandwidth but very low latencies! Reducing latency is often far more important in tightly coupled clusters than bandwidth.)

So, the question:

What are concurrent processes how they work in distributed and shared systems?

... doesn't make sense (even if we ignore the poor grammar). I also don't know what a "shared system" is. It is also not a term of art.

On SMP (symmetrical multiprocessor) systems the Linux kernel initializes all available CPUs (processors) and basically lets them compete to run processes. Each CPU, at each 10ms context switch time, scans the run list (the list of processes and kernel threads which are ready to run --- i.e. not blocked on I/O and not waiting or sleeping), grabs a lock on one of them, and runs it for a while. It's actually considerably more complicated than that --- since there are features that try to implement "processor affinity" (to ensure that processes will tend to run on the same CPU from one context switch to another --- to take advantage of any L1 cache lines that weren't invalidated by the intervening processes/threads) and many other details.

However, the gist of this MP model is that processes and kernel threads may be executing in parallel. The context switching provides the "impression" (multi-tasking) that many processes are running "simultaneously" by letting each do a little work, so in aggregate they've all done some things (responded) on any human perceptible time scale.
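A small experiment makes the idea concrete. This is a sketch, not a benchmark: three background jobs each sleep for one second, and the whole batch finishes in roughly one second rather than three because the kernel schedules them concurrently. Since "sleep" uses no CPU, this works even on a single processor; whether CPU-bound jobs truly run in parallel depends on how many CPUs the kernel has to hand out.

```sh
start=$(date +%s)

for n in 1 2 3; do
    # Each parenthesized group runs as its own background process.
    ( sleep 1; echo "worker $n done" ) &
done

wait    # block until every background job has exited

elapsed=$(( $(date +%s) - start ))
echo "three one-second jobs took about ${elapsed}s"
```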

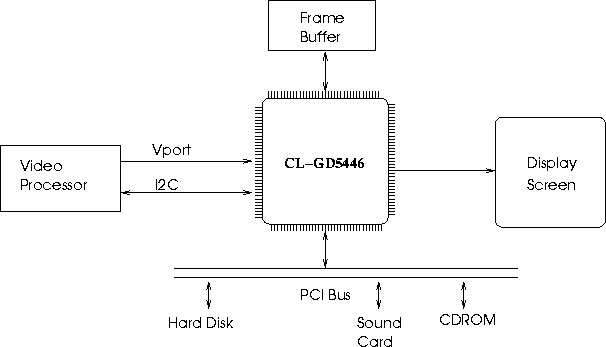

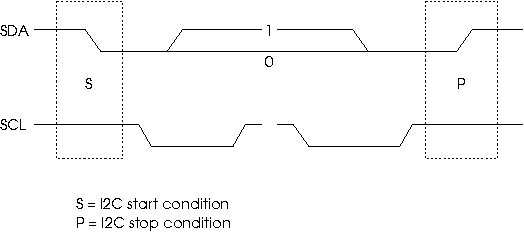

Obviously a "distributed" system has multiple processors (in separate systems) and thus runs processes on each of those "nodes" -- which is truly parallel. An SMP machine is a little like a distributed system (cluster of machines) except that all of the CPUs share the same memory and other devices. A NUMA (non-uniform memory access) system is a form of MP (multi-processing) where the CPUs share the same memory --- but some of the RAM (memory) is "closer" to some CPUs than to others (in terms of latency and access characteristics). In other words the memory isn't quite as "symmetrical."

However, an "asymmetric MP" system would be one where there are multiple CPUs that have different functions --- where some CPUs are dedicated to some sorts of tasks while other CPUs perform other operations. In many ways a modern PC with a high end video card is an example of an asymmetrical MP system. A modern "GPU" (graphics processing unit) has quite a bit of memory and considerable processor power of its own; and the video drivers provide ways for the host system to offload quite a bit of work (texturing, polygon shifting, scaling, shading, rotations, etc) onto the video card. (To a more subtle degree the hard drives, sound cards, ethernet and some SCSI, RAID, and FireWire adapters in a modern PC are other examples of asymmetric multi-processing, since many of these have CPUs, memory and programs, often in firmware but sometimes overridden by the host system. However, that point is moot and I might have to debate someone at length to arrive at a satisfactory distinction between "intelligent peripherals" and asymmetric MP.) In general the phrase "asymmetric multi-processing" is simply not used in modern computing; so the "S" in "SMP" seems to be redundant.

Hey MAC, sign in before you login

From Carl Pender

Answered By Yann Vernier, Faber Fedor, Jay R. Ashworth, Ben Okopnik, Thomas Adam, Heather Stern

Hi, I have a Suse 7.3 Linux PC acting as a gateway with an Apache server running. I have a web site set up and what I want to do is allow only certain MAC addresses onto the network as I choose. I have a script that adds certain MAC addresses onto the network which works perfectly if I type the MAC address in manually but I need to automate it. I'm nearly there, I think, but I need a little help.

Here's the question I asked someone on www.allexperts.com but unfortunately the person could [not] help me. Would you mind having a quick look at it and if anything jumps to your mind you might let me know.

Here goes.... I have a script that matches an IP address with its respective MAC address via the 'arp' command. The script is as follows:

#!/bin/bash

sudo arp > /usr/local/apache/logs/users.txt

sudo awk '{if ($1 == "157.190.66.1") print $3}' \
    /usr/local/apache/logs/users.txt |
    /usr/local/apache/cgi-bin/add

Here is a typical output from the arp command:

Address          HWtype  HWaddress          Flags Mask  Iface
157.190.66.13    ether   00:10:5A:B0:30:ED  C           eth0
157.190.66.218   ether   00:10:5A:5B:6A:11  C           eth0
157.190.66.1     ether   00:60:5C:2F:5E:00  C           eth0

As you can see I send this to a text file from which I capture the MAC address for the respective IP address ("157.190.66.1") and then send this MAC address to another script, called "add", which allows this MAC address onto the network. This works perfectly when I do it from a shell with the IP address typed in manually.

My problem is that instead of actually typing in the IP address (e.g "157.190.66.1"), I want to be able to pipe the remote IP address of the user that is accessing my web page at the time to this script as an input.

In order to do this, I tried:

#!/bin/bash

read ip_address

sudo arp > /usr/local/apache/logs/users.txt

sudo awk '{if ($1 ==$ip_address) print $3}' \
    /usr/local/apache/logs/users.txt |
    /usr/local/apache/cgi-bin/add

But I'm afraid this doesn't work. I'm wondering where I'm going wrong. I also tried putting quotations around the variable $ip_address but that doesn't work either. In my CGI script I have the line 'echo "$REMOTE_ADDR" | /usr/local/apache/cgi-bin/change' to pipe the IP address of the user. I know this is working because if I include the line 'echo "$ip_address"' in my script then the IP address is echoed to the screen.

I hope that I have made myself clear.

Thanks Carl

[Yann] This is a rather simple case of quoting the wrong things. What you want is probably something like '{if ($1 == "'"$ip_address"'") print $3}'

That is, first a '' (two apostrophes) quote block making sure $1 and a " are passed on to awk unchanged, then a "" (two doublequotes) quote block keeping any spaces in $ip_address (not needed with your data, but good practice), then another '' (two apostrophes) block with the rest of the line. The primary difference between '' and "" as far as the shell is concerned is that $variable and such are expanded within "" but not within ''.
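To see what awk would actually receive in each case, it helps to print the program text instead of running it. A small sketch using the address from Carl's example; the variable names "literal" and "spliced" are only for illustration.

```sh
ip_address=157.190.66.1

# Single quotes only: the shell leaves $ip_address alone, so awk sees the literal text.
literal='{if ($1 == $ip_address) print $3}'

# Single-quoted block, double-quoted block, single-quoted block, glued together.
spliced='{if ($1 == "'"$ip_address"'") print $3}'

printf '%s\n' "$literal"   # {if ($1 == $ip_address) print $3}
printf '%s\n' "$spliced"   # {if ($1 == "157.190.66.1") print $3}
```

In the first form awk is left to interpret $ip_address itself, as a field reference through an awk variable that was never set, which is why the original script never matched the way Carl intended.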

Also, your script could be a lot more efficient, and doesn't need superuser privileges:

/usr/sbin/arp -n $ip_address|awk "/^$ip_address/ {print \$3}"

This isn't the most elegant solution either, but somewhat tighter. '$1 == "'$ip_address'" {print $3}' works the same.
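Another way to sidestep the quoting gymnastics, not mentioned in the thread, is awk's standard "-v" option, which sets an awk variable from the command line. A sketch, with "mac_for_ip" as a made-up function name and the arp table read from standard input:

```sh
# mac_for_ip: hypothetical helper. $1 = IP address to look up;
# reads arp-style lines (address, hardware type, MAC, ...) on stdin.
mac_for_ip() {
    # -v copies the shell value into the awk variable "ip" before the program runs.
    awk -v ip="$1" '$1 == ip { print $3 }'
}

# Possible use, assuming arp lives where Yann's example says it does:
#   /usr/sbin/arp -n | mac_for_ip "$ip_address"
```

Because the comparison is an exact string match on the first field, 157.190.66.1 does not accidentally match 157.190.66.13 the way an unanchored regular expression could.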

By the way, it's quite possible you don't need to write your own tools for a job like this, although it is a good way to learn. Have you examined arpwatch? (http://www-nrg.ee.lbl.gov and scroll down the page a bit)

Same fellow, slightly changed situation. -- Heather

Hi, I have a Suse 7.3 Linux PC acting as a gateway for a wireless network. I have a script to allow users onto the network depending on their MAC addresses and another to stop them having access to the network.

What I want to do is let them onto the network and then 5 hours later, log them off again. I was told to use something like this:

#!/bin/bash
/usr/local/apache/cgi-bin/add
sleep 18000
/usr/local/apache/cgi-bin/remove

This is no good to me because if I put the program to sleep it will lock up. I can't have it locking up because then if another user logs on the program will be locked up so they won't be able to access the net.

Do you have any suggestions how to do this?

Thanking you in advance Carl Pender

[Faber] You don't say whether you want them to be logged off after five continuous hours of being logged in or to restrict them from being able to logon outside of a five hour period.

Either way, why not use the at command? In their ~/.profile, place a line that says something like

echo "/usr/local/apache/cgi-bin/remove this_mac_address" | at now + 5 hours

(RTFM to get the exact syntax; your script may need a wrapper, etc.)
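If "at" isn't available, the lock-up Carl is worried about can also be avoided by pushing the sleep into the background, so the script returns as soon as access is granted. A sketch only: "grant_for" is a made-up name, and unlike an "at" job the pending removal is lost if the machine reboots.

```sh
# grant_for: hypothetical helper. $1 = seconds of access,
# $2 = command that grants access, $3 = command that revokes it.
grant_for() {
    duration=$1
    add_cmd=$2
    remove_cmd=$3

    "$add_cmd" || return 1

    # Run the wait and the removal in a background subshell. Its output is
    # detached so a calling CGI script is not kept waiting on it.
    ( sleep "$duration"; "$remove_cmd" ) >/dev/null 2>&1 &
}

# Possible use with the scripts from the question (five hours = 18000 seconds):
#   grant_for 18000 /usr/local/apache/cgi-bin/add /usr/local/apache/cgi-bin/remove
```

Each call gets its own background timer, so a second user logging on is not held up by the first one's five hours.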

[Ben] It sounds a bit more complex than that, Faber (actually, the problem spec is mostly undefined but this sounds like a reasonable guess.) What happens if somebody logs on, spends 4 hours and 59 minutes connected, disconnects, then reconnects? Is it 5 hours in every 24, 5 hours from midnight to midnight, 5 hours a week, 5 cumulative hours, 5 contiguous hours?... There are various ERP packages that do this kind of thing, but they're pretty big - unfortunately, I can't think of anything small at the moment although logic says that there's got to be something.

[jra] ISTM one of the getty's has that stuff built in... or maybe it's xinetd.

For, as Ben says, some subset of the possible problem space.

Well firstly, it's a wireless Hot-spot kind of thing that I'm trying to achieve here so the users don't have profiles.

Secondly, I have a kind of "mock" billing system in place where the user enters credit card details (mock) and then they are allowed access onto the network for five hours. So I want them to no longer have access to the network when that five hours has expired.

This is only for demonstration purposes, so don't worry, I'm not going to use this in a real life situation where I'll be handling credit card info.

I hope it is clearer now

Thanks Carl

[Ben] Perhaps you don't fully realize what you're asking for, Carl. Once you consider the degenerate cases of possible login schedules, you'll realize that this is a large, complex task (you can define it to be much simpler, but you haven't done so.)

[Thomas] Indeed, this is a security risk.... the closest I ever got to modifying the "login" sources was to make the password entry field echo "*"'s as one types in their password. I deleted it afterwards mind!

[Ben] Just as an example of a simple case, you could do this with PAM - which would take a fair bit of study on your part - by creating a one-time temporary account for each user that logs in. PAM would do a "runX" via "pam_filter" (read "The Linux-PAM System Administrators' Guide", http://www.kernel.org/pub/linux/libs/pam/Linux-PAM-html/pam.html) which would invoke an "at" session as Faber suggested. After the period elapses - or if the user logs off - the session and the user account get wiped out, and they would need to get reauthenticated by submitting a credit card or whatever.

I'm sure there are a number of other ways to accomplish similar things.

[Heather] I think the word he's looking for here is "authentication" - lots of coffee-shop or gamer-shop style connections have the cashier authorize folks to use the network, on stations that are wired in ... but wireless is different, you have to get one of these little scripts to pick out the new MAC address and then get a go-ahead to let them aboard.

PAM allows for writing new modules, lemme check this partial list of them (http://www.kernel.org/pub/linux/libs/pam/modules.html) for some sort of moderated-login thingy? Hmm, unless TACACS+, RADIUS or Kerberos offer something like that, looks like you'll need to whip up something on your own, and mess with the control files underlying pam_time, too. However, here's something topical, an Authentication Gateway HOWTO: http://www.itlab.musc.edu/~nathan/authentication_gateway

Which just goes to show that there are more HOWTOs in the world than tldp.org carries. Juicy references to real-world use in the References too.

[Thomas] You might also want to consider making the process uninterruptible (i.e. catch certain calls) until the process is due to expire. This again though has certain inherent security problems with it.

Secure CVS - SSH tunnel problem

From jonathan soong

Answered By Thomas Adam, Ben Okopnik, Jason Creighton, Kapil Hari Paranjape

Hi Gang,

I have been trying to install CVS securely on a machine that will be live on the Internet.

There are two ways i was hoping to secure it:

My problem is with (2) - securing pserver:

A common way of addressing this is to replace rsh with ssh; however, AFAIK this requires shell accounts on the machine, a situation I have to avoid.

[Thomas] Why? Creating a "dummy" account is easy enough.

The solution I have which seems feasible is:

Using pserver's user management, tunnelled over ssh with a generic ssh login and some sort of restricted shell.

I'm currently investigating this solution; however, I'm not sure if there is a fundamental security flaw in this model, or what the restricted shell should look like.

I was wondering if you had any thoughts/opinions/suggestions on this? Or perhaps you could point out a *much* easier way to secure it that I missed!!

Any help would be much appreciated,

Jon

[Thomas] If CVS is the only thing that the "users" will be using, then it is conceivable that you can have a "generic" login via SSH whereby this "user" has CVS as its default $SHELL.

While I am not particularly sure of the security implications that my following suggestion has, I think that you could do something like this:

- Create a generic account

- edit "/etc/shells" and add at the bottom "/usr/bin/cvs"

- Save the file.

- change the generic user's shell.

(At this point, I am wondering whether or not it is a good idea to create a "wrapper" script for this "new" shell, something like:

See attached shellwrap.thomas.bash.txt

And saving it as "/sbin/cvsshell", which you could then add to "/etc/shells" instead?)

[Ben] What happens when somebody suspends or "kill -9"s the shell? What new attack scenarios can you expect from this? What would happen if a local user launched this shell after hosing an environment variable (a la the emacs/IFS attack scenario from the old days)?

[Thomas] Errrm, I guess my answer to this is a bleak one...

[Ben] It's probably best to just launch shells that way and let those guys answer these kinds of questions.

[Thomas] Aye...

(Details of step 4.) That way when the user is created,

- Edit "/etc/passwd"

- find the newly created user

- edit "/bin/bash" to "/sbin/cvsshell" (without quote signs mind you)

- and save the file.

Then you can use "ssh" to log in as the newly created user, and the login shell would be CVS.

I'm not sure how secure this would be.......

Using "rbash" is not an option in this case.

In almost-as-we-hit-the-press news, it looks like pserver doesn't require the local user to have a useful shell, so /bin/false should work. According to the querent, anyway. I'm not precisely sure of the configuration on the pserver side that leads to that, though. -- Heather

[Thomas] Before using this, I am sure other people will flame me for it (hey Ben) but.......it is a learning curve for me too

[Ben] Don't look at me, buddy. It's been at least, what, an hour since I've flamed you? I'm still in my refractory period.

[Thomas] LOL, an hour? Is that all?? Things are looking up for me then

Hmmm, it was just an idea..... I'm curious as to whether it would work, minus some of the security implications......

[Ben] To querent: I've never used CVS over SSH, etc., but you might want to take a look at "scponly" <http://www.sublimation.org/scponly/>. It's designed for the kind of access you're talking about (if I understood you correctly), and is very flexible WRT user management (one anonymous user is fine, so are multi-user setups.)

Hi guys,

Thanks for your help. I decided to implement it like so:

SECURE CVS without multiple unix accounts

Now only those developers who have sent you keys will be able to log in (passwordless) to the CVS machine, and they will automatically be dumped to sleep for 3 hours - this will keep the ssh port forward open.

[Thomas] Sounds like a good idea this way.

Now I can securely use CVS's pserver user management, without multiple unix users.

Anyone have any thoughts on the security implications of forcing the users to execute 'sleep 3h' e.g. can this be broken by sending weird signals?

[Thomas] Assuming that the command "sleep 3h" is spawned once the user logs in, then as with any process this can be killed by doing:

kill -9 $(pidof sleep)

(I have seen the command "pidof" on Debian, SuSE and RH -- it might not be distributed with Slackware as this claims to be more POSIX compliant, something that "pidof" is not).

[Jason] Sure enough, slackware 8.1 has this command: (And, just for the record, Slackware is more BSD-ish. I've never heard a claim that it is more POSIX compliant.)

~$ about pidof
/sbin/pidof: symbolic link to killall5
/sbin/killall5: ELF 32-bit LSB executable, Intel 80386, version 1 (SYSV), dynamically linked (uses shared libs), stripped
~$ greppack killall5
sysvinit-2.84-i386-19

(Of course, to use the 'about' and 'greppack' scripts, you'd have to ask me to post them.)

Last I recall POSIX was a standard that declared minimum shell and syscall functionality, so I don't see why it would insist on having you leave a feature out. In fact "minimum" is the key since merely implementing POSIX alone doesn't get a usable runtime environment, as proved by Microsoft. -- Heather

[Thomas] The more traditional method, is to use something like....

kill -9 $(ps aux | grep "sleep 3h" | grep -v grep | awk '{print $2}')

If this happens then the rest of your command will fail.

The security implications of this, is that the rest of the command will never get executed. I came up with a "bash daemon" script three years ago that would re-spawn itself by "exec loop4mail $!" which used the same process number as the initial "loop4mail &" command.

Security was not paramount in that case.

If the command is killed, then the users will most likely be left dangling at the Bash prompt.....
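What "the rest of your command will fail" means in practice can be checked with a short experiment. This is a sketch with a 30-second sleep standing in for the real "sleep 3h": the sleep is killed with signal 9 and its exit status collected, which is what anything chained after it with "&&" would see.

```sh
sleep 30 &              # stand-in for the long "sleep 3h"
pid=$!

kill -9 "$pid"          # SIGKILL cannot be caught or ignored by the target

status=0
wait "$pid" || status=$?

# A status above 128 means "terminated by a signal" (128 + signal number).
echo "sleep ended with status $status"
```

Since the status is non-zero, a command line of the form "sleep 3h && something" would skip the "something".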

[Ben] Well, the "about" script is rather obvious,

[Jason] Basically, the only thing it does is follow symlinks recursively and call "file" with a full list.

[Thomas] Hmmm, I have a similar script to yours that you describe here, Jason, except that mine "traverses" the symlinks until file returns anything != to another symlink. If it does, then it keeps traversing.

[Jason] Okay, I think I see what you're saying now: A symlink will never point to more than one thing. Therefore, we could solve the problem with a loop, breaking out of it when there are no more symlinks to process. Recursion is not required.

Hmm... that's interesting. However, I already wrote the recursive version, so I'll stick with that.

If a symlink doesn't point to anything, it will fail a test for file existence:

~/tmp$ ln -s doesnotexist symlink
~/tmp$ ls -l
total 0
lrwxrwxrwx 1 jason users 12 May 27 10:46 symlink -> doesnotexist
~/tmp$ [ -e symlink ] && echo "symlink exists"
~/tmp$

Circular symlinks are fun too.......
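The loop-instead-of-recursion idea can be sketched in a few lines of shell. This is neither Jason's nor Thomas's script (those weren't posted); "resolve_link" is a made-up name, it relies on the plain "readlink" utility, and the hop limit of 40 is an arbitrary guard against the circular links mentioned above.

```sh
# resolve_link: hypothetical helper. Follows a chain of symlinks one hop at a
# time and prints the first path that is not itself a symlink.
resolve_link() {
    target=$1
    hops=0
    while [ -L "$target" ]; do
        hops=$((hops + 1))
        [ "$hops" -le 40 ] || return 1        # probably a circular chain
        next=$(readlink "$target") || return 1
        case $next in
            /*) target=$next ;;                        # absolute link target
            *)  target=$(dirname "$target")/$next ;;   # relative to the link's directory
        esac
    done
    printf '%s\n' "$target"
}

# Possible use:
#   file "$(resolve_link /usr/bin/vi)"
```

A dangling link simply ends the loop at the missing name, so the caller still has to check that the result exists.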

[Thomas] My logic in this is simple in that a symlink must point to a physical store of data, be it a directory, file, block file, etc. Also, you might want to look at the program "chase" which is rather useful in these situations too.

[Jason] Haven't heard of that one and it's not on my system.

[Kapil] Two programs that are useful to traverse symlinks come with standard distributions: namei (util-linux) and readlink (coreutils/fileutils)

$ namei /usr/bin/vi

Gives

f: /usr/bin/vi
 d /
 d usr
 d bin
 l vi -> /etc/alternatives/vi
   d /
   d etc
   d alternatives
   l vi -> /usr/bin/nvi
     d /
     d usr
     d bin
     - nvi

While

$ readlink -f /usr/bin/vi

Gives

/usr/bin/nvi

[Thomas] This feature might be superfluous to your initial script, but I find it quite useful. "find" is a very powerful utility.

So I shall extend you the same offer, and say that I'll post you my script, if you like....

[Ben] ...but "greppack" has to do with Slackware's package management...

[Jason] Bingo. All it does is print the name of a file if a regex matches somewhere in it, because Slackware's package "management" is quite simple.

[time passes]

I was just looking at the options for 'grep' and it turns out that I could just call grep, like so:

grep killall5 -l /var/log/packages/*

'-l' causes grep to print the names of the files that match, not the lines that match.
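Wrapped up as a function, that one-liner might look like the sketch below. It is a guess at what such a wrapper boils down to, not Jason's actual script; the PKGDIR variable is an assumption added so the function can be tried on a directory other than the Slackware default.

```sh
# greppack: illustrative wrapper around "grep -l", not the original script.
# Prints the name of every package file list in which the pattern occurs.
# PKGDIR is an assumed override; it defaults to the Slackware location
# mentioned in the thread.
greppack() {
    grep -l -- "$1" "${PKGDIR:-/var/log/packages}"/*
}
```

Calling "greppack killall5" should then behave like the command above.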

Jason Creighton, CEO of Wheel Reinvention Corp.

(Our motto: "Code reuse is silly")

[Ben] ... and so would not be anything like Debian - where you'd just do "dpkg -S killall5" to find out the package it came from. I'll say this: in almost everything I've ever thought to ask of a packaging system, between "dpkg", "apt-get", and "apt-cache", Debian has a good, well-thought-out answer. The one thing that's not handled - and I don't really see how it could be without adding about 5MB that most folks would never use - is looking up a file that's in the Debian distro but is not installed on my system. I handle that by downloading the "Contents-i386.gz" file once every few months and "zgrep"ping through it; it's saved my bacon many, many times when a compile went wrong.

[Kapil] To make this lookup faster you may want to install "dlocate" which is to "dpkg" (info part) what "locate" is to "find".

[Ben] Cool - thank you! That was my one minor gripe about "dpkg" - on my system, it takes about 20 seconds (which is years in computer time) to look things up.

[Kapil] And for those with network connectivity:

http://packages.debian.org

Contains a search link as well.

[Ben] Unfortunately, that does not describe me very well.

Otherwise, I'd just have written a little Perl interface to the search page and been done with it. Instead, I download a 5MB or so file when I have good connectivity so I have it to use for the rest of the time.
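Ben's offline lookup can be sketched as a small function. The name find_in_contents and the default file location are assumptions for illustration; the sketch also spells out the decompress-and-grep pipeline with "gzip -dc" rather than calling "zgrep", which does essentially the same thing.

```sh
# find_in_contents: hypothetical helper around the approach Ben describes.
# $1 = pattern to look for
# $2 = path to a previously downloaded Contents file
#      (defaults to ./Contents-i386.gz, an assumed location)
find_in_contents() {
    # Decompress to stdout and search; the exit status is grep's
    gzip -dc -- "${2:-Contents-i386.gz}" | grep -e "$1"
}
```

Each matching line pairs a file path with the section and package that ship it, so "find_in_contents killall5" would show which package to install.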

By Michael Conry

Submitters, send your News Bytes items in PLAIN TEXT format. Other formats may be rejected without reading. You have been warned! A one- or two-paragraph summary plus URL gets you a better announcement than an entire press release. Submit items to gazette@ssc.com

June 2003 Linux Journal

![[issue 110 cover image]](misc/bytes/lj-cover110.png)

The June issue of Linux Journal is on newsstands now. This issue focuses on Program Development. Click here to view the table of contents, or here to subscribe.

All articles older than three months are available for public reading at http://www.linuxjournal.com/magazine.php. Recent articles are available on-line for subscribers only at http://interactive.linuxjournal.com/.

OpenForum and Software Patents

A false or misled 'open source representative' has signed an industry resolution calling for the EU to allow software patenting, which has been sent to members of the European Parliament... In an open letter, Graham Taylor, director of OpenForum Europe, rejected Perens' interpretation. Taylor made the point that OpenForum Europe only had a brief to represent its members, largely composed of businesses and corporations, and did not seek or claim to represent the wider Free Software or Open Source communities. It is questionable whether this distinction was equally clear to other readers of the initial letter.

SCOundrels?

As readers are surely aware, SCO (the software company formerly known as Caldera) has launched a hostile legal attack against IBM in particular, and indeed against the GNU/Linux community as a whole. Although the details will remain somewhat obscured until the case is thrashed out in court, it appears that SCO is alleging that IBM took code it had licensed from SCO (for AIX) and showed it to Linux kernel developers. It was access to this code that allowed GNU/Linux to become the stable and powerful operating system it is today... or so the story goes. The entire suit can be read at SCO's website.

This has led to some bizarre situations, such as SCO threatening to sue its partners in the UnitedLinux project, and the suspension of its own GNU/Linux related activities. One can only guess at how this plays with SCO's GNU/Linux customers who have now been marooned in a dubious legal situation. Perhaps they could sue SCO, since SCO was illegally selling intellectual property SCO owned (or something!).

To try and make some sense of this situation, it is useful to read Eric Raymond's OSI position paper on the topic. This document is a fine read, and gives an interesting overview of Unix history as related to the legal case. It would appear that there are one or two inconsistencies, inaccuracies and perhaps outright lies and deceptions in SCO's claims. Some of this madness is further highlighted in Linux Weekly News's account of SCO's refusal to come clean with details of what code infringes on their intellectual property (at least without signing a nondisclosure agreement). SCO CEO Darl McBride is quoted as saying:

"The Linux community would have me publish it now, (so they can have it) laundered by the time we can get to a court hearing. That's not the way we're going to go."

But as LWN points out:

"The Linux community, of course, would be incapable of "laundering" the code, since it is, according to SCO, incapable of implementing (or reimplementing) anything so advanced without stealing it.

One has to wonder who was responsible for stealing the "intellectual" part of SCO's intellectual property.

...

Such a series of events would not change SCO's case in any way, however. If IBM truly misappropriated SCO's code, that act remains. And it is an act that cannot be hidden; the evidence is distributed, beyond recall, all over the Internet. And all over the physical world as well.

How this will all pan out is anybody's guess. It is certain that the story has some way to run yet. Further spice was added to the mix by Microsoft's decision to license SCO software leading to suspicions that they were attempting to bankroll SCO's legal adventures and help to undermine confidence in Free and Open Source software. Reports that SCO has destroyed archives of the Caldera-Microsoft antitrust lawsuit documentation have fuelled such speculation. Novell weighing in and claiming ownership of the contested code has further confused matters. An interesting development is the granting by German courts of an injunction preventing SCO from saying (in Germany) that Linux contains illegally obtained SCO intellectual property.

Probably the best course of action is that proposed by Ray Dessen on the Debian Project lists and reported by Debian Weekly News:

"the issue so far consists of allegations and rumors from a company that is far along the way to obsolescence. They have yet to produce anything that could be remotely considered evidence, while there have been concrete indications of SCO itself violating the GPL by the inclusion of GPLed filesystem code from the Linux kernel into its proprietary (Unixware?) kernel."

This "wait and see" approach is also the one taken by Linus Torvalds. If you want to be more active, you could start shouting "Hey SCO, Sue Me" or answer Eric Raymond's request for information.

Some interesting articles from the O'Reilly stable of websites:

IBM Developerworks overview on the Linux /proc filesystem.

From The Register:

Open Source Digest introduction to SkunkWeb (continues in part 2)

From Linux Journal:

Some interesting links found via Linux Today:

And some links from NewsForge:

Listings courtesy Linux Journal. See LJ's Events page for the latest goings-on.

| Event | Dates | Location | URL |
|---|---|---|---|
| CeBIT America | June 18-20, 2003 | New York, NY | http://www.cebit-america.com/ |
| ClusterWorld Conference and Expo | June 24-26, 2003 | San Jose, CA | http://www.clusterworldexpo.com |
| O'Reilly Open Source Convention | July 7-11, 2003 | Portland, OR | http://conferences.oreilly.com/ |
| 12th USENIX Security Symposium | August 4-8, 2003 | Washington, DC | http://www.usenix.org/events/ |
| HP World | August 11-15, 2003 | Atlanta, GA | http://www.hpworld.com |
| LinuxWorld UK | September 3-4, 2003 | Birmingham, United Kingdom | http://www.linuxworld2003.co.uk |
| Linux Lunacy | September 13-20, 2003 | Alaska's Inside Passage | http://www.geekcruises.com/home/ll3_home.html |
| Software Development Conference & Expo | September 15-19, 2003 | Boston, MA | http://www.sdexpo.com |
| PC Expo | September 16-18, 2003 | New York, NY | http://www.techxny.com/pcexpo_techxny.cfm |
| COMDEX Canada | September 16-18, 2003 | Toronto, Ontario | http://www.comdex.com/canada/ |
| IDUG 2003 - Europe | October 7-10, 2003 | Nice, France | http://www.idug.org |
| LISA (17th USENIX Systems Administration Conference) | October 26-30, 2003 | San Diego, CA | http://www.usenix.org/events/lisa03/ |
| HiverCon 2003 | November 6-7, 2003 | Dublin, Ireland | http://www.hivercon.com/ |
| COMDEX Fall | November 17-21, 2003 | Las Vegas, NV | http://www.comdex.com/fall2003/ |

IBM Announces New Grid Offerings, Partners to form Grid Ecosystem

IBM has announced new offerings to further expand Grid computing into commercial enterprises, including the introduction of new solutions for four industries - petroleum, electronics, higher education and agricultural chemicals. In addition IBM announced that more than 35 companies, including networking giant Cisco Systems, will join IBM to form the foundation of a Grid ecosystem that is designed to foster Grid computing for businesses.

IBM is working with Royal Dutch Shell to speed up the processing of seismic data. The solution, based on IBM eServer xSeries running Globus and GNU/Linux, cuts the processing time of seismic data while improving the quality of the data. IBM also announced RBC Insurance and Kansai Electric Power as new Grid customers.

Geek fair

Free Geek is a 501(c)(3) non-profit organization based in Portland, Oregon, that recycles used technology to provide computers, education and access to the internet to those in need in exchange for community service.

They are organizing a GEEK FAIR (version 3.0), which will take place on Sunday, June 29th, from noon to 6pm at 1731 SE 10th Avenue, Portland, Oregon. The free community block party will include Hard Drive Shuffleboard, Live Music, Square Dancing, Food, a Sidewalk Sale and Funny Hats.

Obviously most readers (worldwide) will have geographical problems attending this particular event, but maybe it will give people ideas to organise something similar more locally.

GELATO Federation

Overwhelming interest in running GNU/Linux on Itanium processors has helped to double membership in the Gelato Federation to 20 institutions. Gelato is a worldwide collaborative research community of universities, national laboratories and industry sponsors that is dedicated to providing scalable, open-source tools, utilities, libraries and applications to accelerate the adoption of GNU/Linux on Itanium systems.

Gelato's technical foci are determined by the members and sponsors, and collaborative work is conducted through the Gelato portal. Portal activity has tripled in the past two quarters, reflecting the momentum in membership growth. Recent member software made available through the Gelato portal includes two contributions from CERN: GEANT4, a toolkit for the simulation of the passage of particles through matter; and CLHEP, a class library for high-energy physics; and one from Gelato Member NCAR: the Spectral Toolkit, a library of multithreaded spectral transforms.

College Linux

Tux goes to college. Russell Pavlicek of NewsForge reports on College Linux, which has been developed in Robert Kennedy College, Switzerland. The distro has quite an important place in the operation of the college as some students study entirely via the internet.

Debian

Debian Weekly News reported that the miniwoody CD, which offers a stripped-down variant of Debian woody, has been renamed to Bonzai Linux.

SuSE

The SuSE Linux CGL Edition is available at no charge as a Service Pack to SuSE Linux Enterprise Server 8 customers. CGL incorporates technologies defined by the OSDL's Carrier Grade Linux Working Group, an initiative whose members include SuSE, HP, IBM, Intel and leading Telecom and Network Equipment providers.

UnitedLinux
